In recent years, there have been increasing calls to address the challenges of satellite network scalability and resilience against threats, both natural and manmade. To better understand the potential impact on satellite system and network architectures, we must first examine the implications of the unique operating conditions and common use-case requirements of space systems. While there are similarities with the evolution of terrestrial communication networks and data centers, the need for fluidity in how data is managed is uniquely heightened in space.

In this series of micro blogs, we will discuss the sequence of events that led to a revolution in how space systems are architected for “New Space” applications. That revolution created the need for onboard data storage, and it explains why data fluidity is critical to achieving the responsive, autonomous, mission-adaptive functionality needed for long-term satellite network survivability.

A pivotal change in U.S. military doctrine can be traced back to a 2014 speech by General William Shelton. In that speech, he said, “U.S. strategic military satellites are vulnerable to attack in a future space war and the Pentagon needs to move to smaller distributed satellites.” Shelton indicated that the United States’ highest-priority military satellites are those providing resilient communications and advanced missile warning. If any of these critical satellites were attacked and destroyed in a conflict or crisis, the loss “would create a huge hole in our capability” to conduct modern, high-tech warfare, not to mention the price tag of roughly $1 billion per satellite.

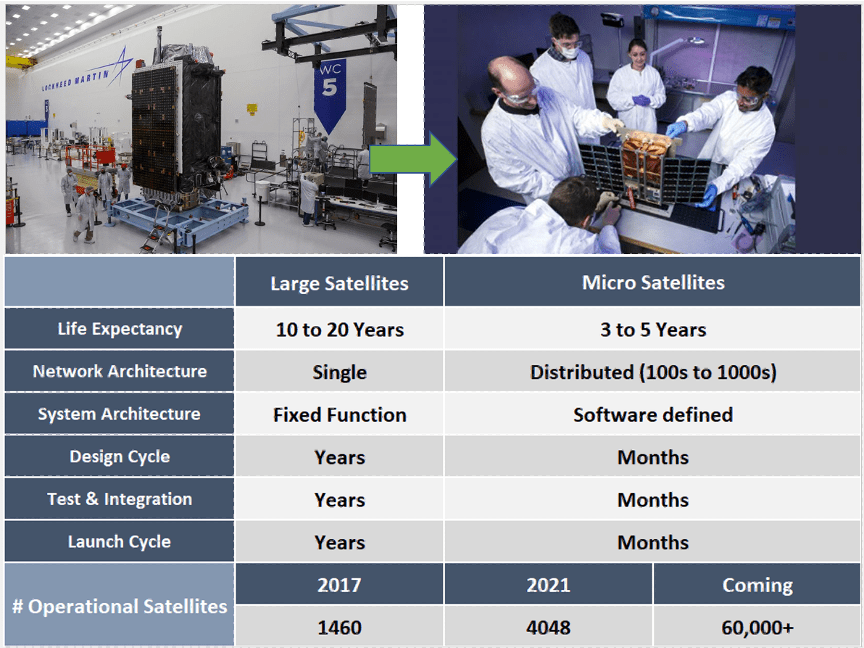

The policy of loading large satellites with numerous types of sensors and missions worked well in the past, but the increasingly contested space environment demands a new strategy and architecture. The general’s proposed strategy called for “architectural alternatives,” then being studied by both the military and industry, that would shift away from large, multiple-payload satellites in favor of a larger number of smaller, simpler, and more nimble systems. Such an approach would improve resilience to threats and cost less, an important advantage under tighter defense budgets. “By distributing our space payloads across multiple satellite platforms, we increase our resiliency to the cheap shot or premature failure,” Shelton said. “At a minimum, it complicates our adversaries’ targeting calculus.”

A new satellite constellation based on a more resilient, distributed network architecture also calls for new satellite system architectures and operating modes. To provide matrixed coverage with no single point of failure, much as terrestrial cloud computing does, satellites must communicate with, and reroute traffic through, adjacent domains to keep the network continuously linked. This network and system architecture transition, combined with the need to respond to threats in real time without word from Houston, requires distributed intelligence. Distributed intelligence in turn drives the need for distributed data buffers and large-capacity onboard storage, which will be the focus of the next blog in this series.
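To make the idea of rerouting around a lost satellite more concrete, here is a minimal sketch under loudly stated assumptions. It models a hypothetical constellation as a torus of orbital planes and slots, where each satellite has cross-links to its fore and aft neighbors in the same plane and to the nearest satellites in the two adjacent planes. The helper names `torus_neighbors` and `route` are illustrative only, and plain breadth-first search stands in for whatever routing protocol an actual constellation would use.

```python
from collections import deque


def torus_neighbors(node, planes, slots):
    """Cross-link neighbors of a satellite in a hypothetical planes x slots torus.

    node is (plane, slot). Links go fore/aft within the same orbital plane
    and left/right to the same slot in the adjacent planes (an assumption,
    not a description of any real constellation).
    """
    p, s = node
    return [
        (p, (s + 1) % slots),   # next satellite in the same plane
        (p, (s - 1) % slots),   # previous satellite in the same plane
        ((p + 1) % planes, s),  # neighbor in the next plane
        ((p - 1) % planes, s),  # neighbor in the previous plane
    ]


def route(src, dst, planes, slots, failed=frozenset()):
    """Breadth-first search for a fewest-hop path from src to dst.

    Satellites listed in `failed` are treated as destroyed and skipped.
    Returns the path as a list of nodes, or None if dst is unreachable.
    """
    if src in failed or dst in failed:
        return None
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the parent links back to src to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in torus_neighbors(node, planes, slots):
            if nxt not in failed and nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None


# Usage on a hypothetical 4-plane x 6-slot constellation.
healthy = route((0, 0), (0, 2), planes=4, slots=6)
degraded = route((0, 0), (0, 2), planes=4, slots=6, failed={(0, 1)})
print(len(healthy) - 1)   # 2 hops
print(len(degraded) - 1)  # 4 hops
```

In this toy model, losing the relay satellite lengthens the route from two hops to four instead of severing the link. The route fails only when every cross-link neighbor of the destination is lost, which illustrates why no single satellite is indispensable in a distributed architecture.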