In recent months and years, there have been increasing calls to address the challenges of satellite network scalability and resilience against threats, both natural and manmade. To better understand the potential impact on satellite system and network architectures, we must first examine the implications of the unique operating conditions in space and of common use case requirements. While there are similarities with the evolution of terrestrial communication networks and data centers, the need for fluidity in how data is managed is uniquely heightened in space. In this series of micro blogs, “The Fluidity of Data in Space”, we will discuss the sequence of events that led to a revolution in how space systems are being architected for “New Space” applications, resulting in the need for onboard data storage, and explain why data fluidity is critical to achieving responsive, autonomous, mission-adaptive functionality for long-term satellite network survivability.

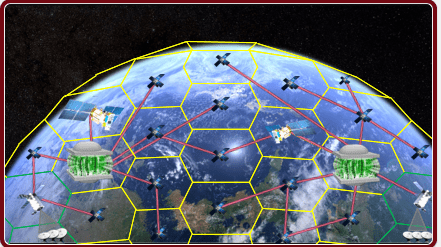

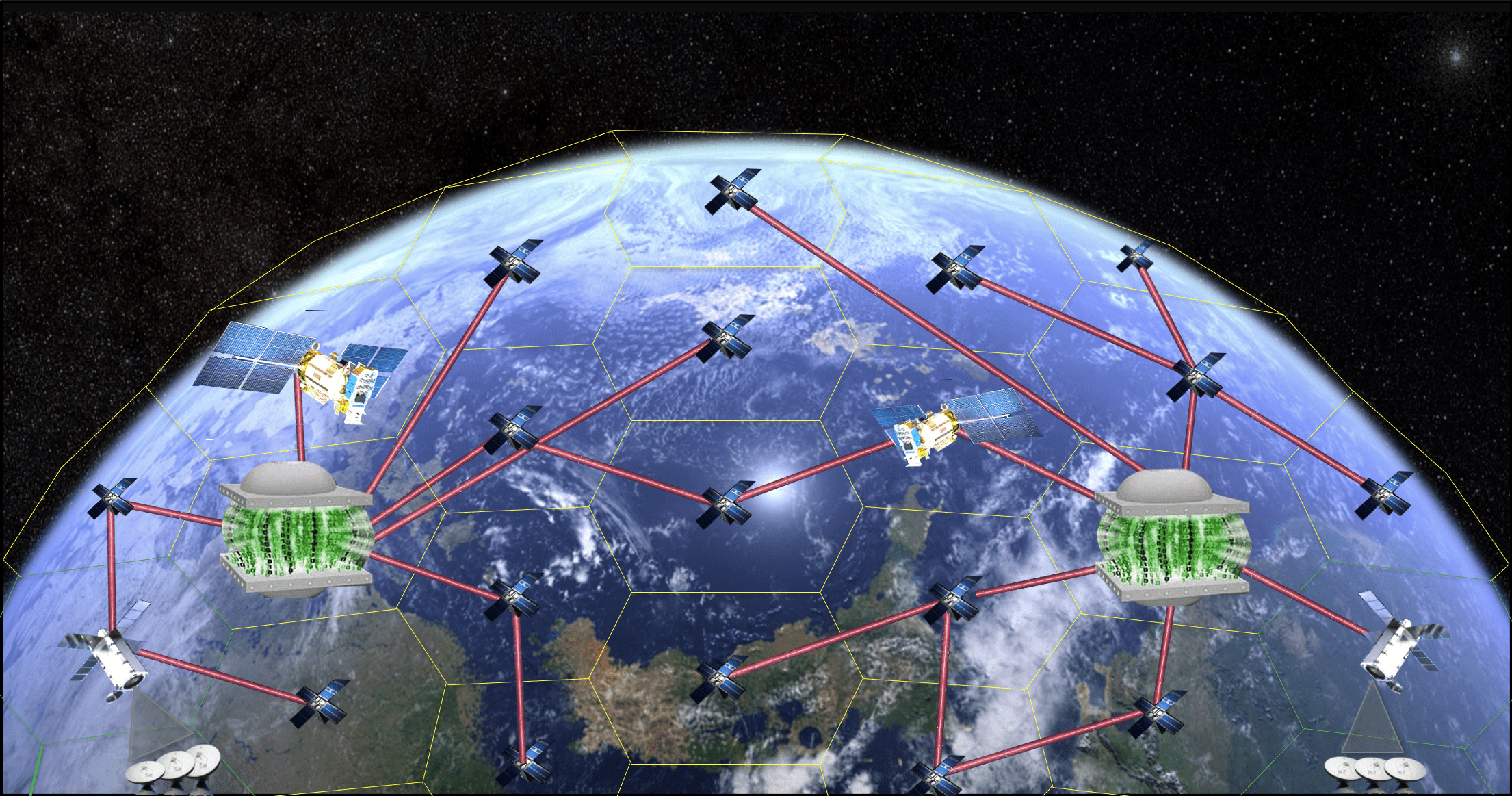

As we saw in the previous blog, the astronomical growth in the number of IoT nodes in space (micro satellite constellations), each collecting data, is producing a skyrocketing volume of data that must be dispatched in one or more of the following ways:

Which of the above data dispatch paths is used depends largely on the use case. As mentioned in the previous blog, there are two overarching use case categories that dictate how we think about the corresponding data types:

Satellites have historically retrieved information from onboard sensors and stored it in various types of memory and disk drives, combining radiation-susceptible memory such as flash with rotating media. As the number of onboard sensors grows and their resolution increases, the volume of data to be logged is growing as well. This stored data is typically used to derive insights over time, such as weather patterns or intelligence information, or to generate AI training models for a variety of applications.

Another dynamic becoming increasingly clear is the need for more real-time responsiveness in satellite technology to deal with threats, both natural and manmade. Satellites have historically leveraged ruggedized microelectronics because of the remote and harsh environmental conditions of space, but they have been ill equipped to deal with rapidly changing dynamics presented by space junk, solar flares, meteors, or intentional threats such as weapons systems. As recently revealed by NASA scientists, an entire batch of 40 SpaceX satellites was destroyed by an unexpected and unpredictable solar storm. With rising geopolitical tensions, and with advances in the weapons systems of adversarial nations raising alarms about the vulnerability of military and commercial satellite constellations, heightened focus is being placed on the ability of satellites to interpret threats and respond to them in real time. As a result, it is critical that this more transient data be buffered immediately and processed rapidly for decision making. In this scenario, the latency of data buffering and processing matters more than data volume, because the data does not need to be stored and can be discarded once it has been acted upon.

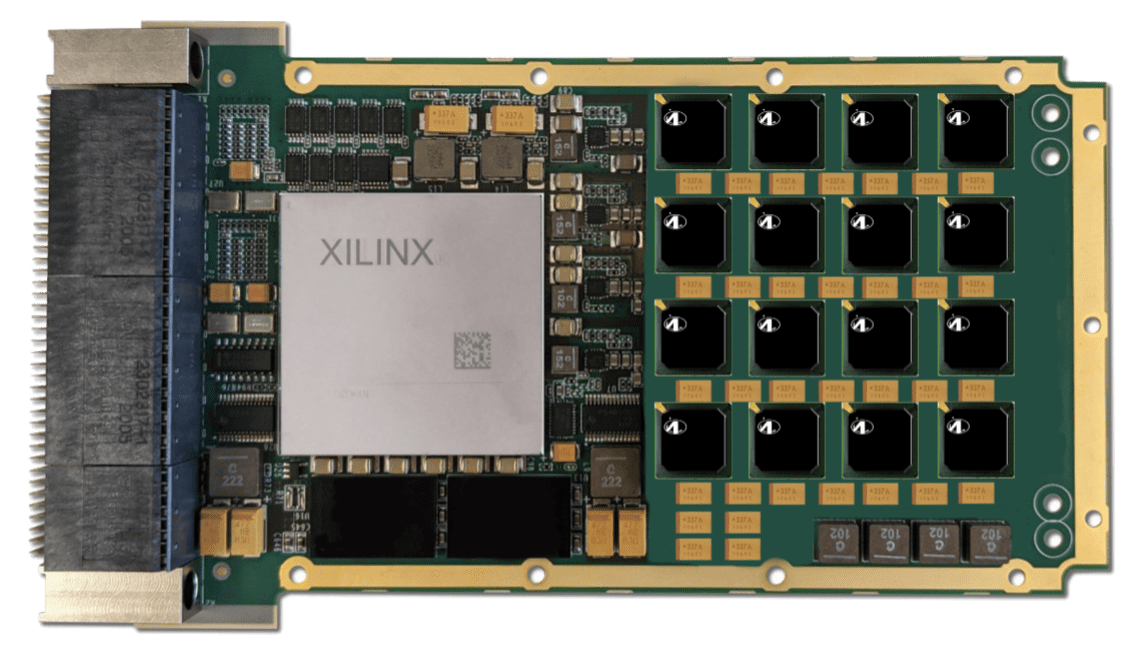

Now that we have clarified some use cases for transient versus stored data, we will examine the system-level impact of these evolving requirements. Each satellite node could be collecting real-time data at a rate of ~8 GB/day. Assuming this data is buffered, an ideal buffer would offer reasonably high performance in terms of latency and would be inherently non-volatile, radiation immune, and capable of near-infinite read/write cycle endurance, which matters all the more as AI algorithms become increasingly write-intensive. Given this unique set of requirements, the best solution would be an 8GB MRAM memory module as shown below; we will examine exactly why in a follow-on chapter. For now, focusing on the “buffer model”, this solution is ideal while we decide what to do with the incoming data: the data is non-volatile and accessible with no wait states, and it is impervious to power outages caused by solar storms, brownouts, limited charging, and so on. If the buffer starts to fill up, the satellite can use high-speed optical links to communicate with an adjacent satellite equipped with a micro data center for extra storage, rebalancing the data load across the network.
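To put the ~8 GB/day figure in perspective, the sketch below computes the average ingest rate and how long an empty 8GB buffer would last with nothing drained, then models the "fill up, then rebalance" step as a simple high-water-mark policy. The use of decimal gigabytes (10^9 bytes), the 80% threshold, the drain-to-half-full target, and the `offload_to_neighbor` callback are all illustrative assumptions, not details of any real flight software.

```python
from dataclasses import dataclass, field
from typing import Callable, List

GB = 10**9  # assumption: decimal gigabytes
SECONDS_PER_DAY = 86_400


def average_ingest_rate(bytes_per_day: float) -> float:
    """Average ingest rate in bytes per second."""
    return bytes_per_day / SECONDS_PER_DAY


def hours_until_full(capacity_bytes: float, bytes_per_day: float) -> float:
    """Hours for an empty buffer to fill if nothing is drained or offloaded."""
    return capacity_bytes / average_ingest_rate(bytes_per_day) / 3600


@dataclass
class NodeBuffer:
    """Hypothetical onboard buffer with a high-water-mark offload policy."""
    capacity: int
    high_water: float = 0.8  # assumption: rebalance when more than 80% full
    used: int = 0
    offloaded: List[int] = field(default_factory=list)

    def ingest(self, nbytes: int, offload_to_neighbor: Callable[[int], None]) -> None:
        """Add incoming data; if above the high-water mark, offload down to half full."""
        self.used += nbytes
        if self.used > self.capacity * self.high_water:
            # Hypothetical rebalancing: push everything above half capacity
            # over the optical link to an adjacent micro data center.
            excess = self.used - self.capacity // 2
            offload_to_neighbor(excess)
            self.offloaded.append(excess)
            self.used -= excess
```

At 8 GB/day the average rate works out to roughly 92.6 kB/s, so an 8GB buffer holds about one day of data. Feeding `NodeBuffer(capacity=8 * GB)` ten 1 GB chunks crosses the 6.4 GB threshold twice, each time offloading 3 GB and leaving 4 GB onboard. A real policy would likely weigh link availability and neighbor capacity as well.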

Data in this 8GB MRAM module is consumed and processed by the AI Engine of a co-processor, shown in this example as a Xilinx/AMD Adaptive SoC. Data streams from multiple sensors can be fused, the processor can take an action based on the analyzed data, and the data can then be discarded. The goal is to support continuous real-time analysis of this transient data, with generally no need to store it: analyze, act, and then move on.
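The "analyze, act, and then move on" loop can be sketched as follows. The fusion rule (a confidence-weighted average of per-sensor threat scores), the 0.7 decision threshold, the "maneuver"/"continue" actions, and the names `SensorReading`, `fuse`, and `drain_and_act` are hypothetical stand-ins for whatever the AI Engine actually runs; the point is only that each frame is consumed from the buffer and then dropped rather than stored.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class SensorReading:
    sensor_id: str
    threat_score: float  # hypothetical scale: 0.0 (benign) to 1.0 (severe)
    confidence: float    # weight given to this sensor during fusion


def fuse(readings: List[SensorReading]) -> float:
    """Confidence-weighted average of threat scores across sensors."""
    total_weight = sum(r.confidence for r in readings)
    if total_weight == 0:
        return 0.0
    return sum(r.threat_score * r.confidence for r in readings) / total_weight


def drain_and_act(buffer: Deque[List[SensorReading]], threshold: float = 0.7) -> List[str]:
    """Fuse each buffered frame, decide on an action, then discard the frame."""
    actions = []
    while buffer:
        frame = buffer.popleft()  # frame leaves the buffer and is not retained
        score = fuse(frame)
        actions.append("maneuver" if score >= threshold else "continue")
    return actions
```

For example, a frame where an optical sensor reports 0.9 (weight 0.8) and a radar reports 0.8 (weight 0.2) fuses to 0.88 and triggers "maneuver", while a frame fusing to 0.2 yields "continue"; afterwards the buffer is empty.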

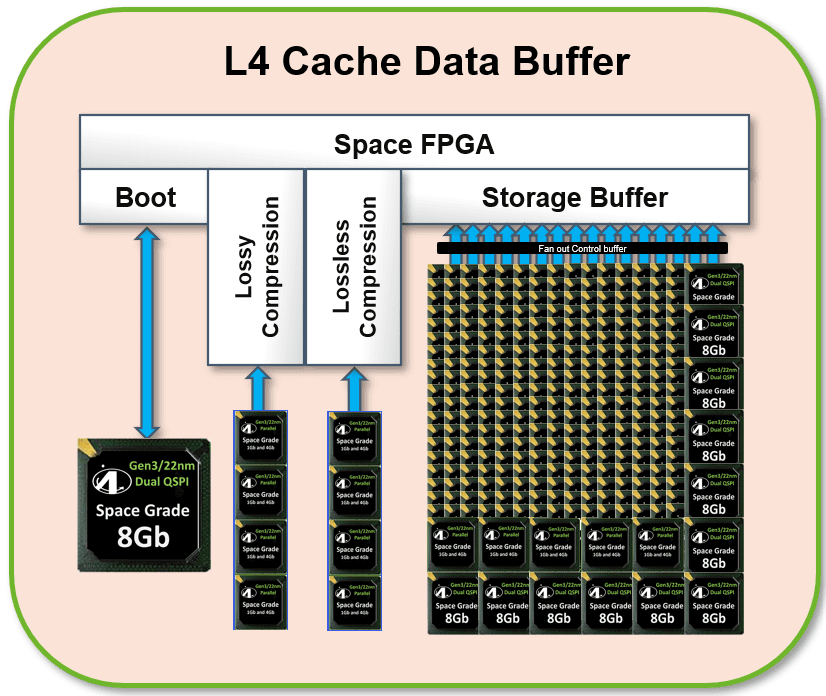

If the data is to be stored for long-term analysis, as in the data logging use case (for example, creating an AI training model), it is sent to the adjacent micro data center, with storage capacities on the order of terabytes. The ideal solution here is also an MRAM array, but with two differences because the data is kept long term: access speed is less critical, and the data can be compressed inline. A typical compression engine could add around 15–20 watts to the power draw of the processing element (depending on how much compression loss is allowed), which is significant for a space-based application relying on limited sun exposure for power. However, because this is not a time-critical use case, if the incoming data is buffered first, compression and commitment of data to the storage array can be deferred until power is readily available (e.g., when the power cells are fully charged and the solar panels are producing power), maximizing energy efficiency with a power profile tailored to the mission.
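A power-aware version of this deferral might look like the sketch below: raw data is buffered first, and compression plus commitment to the archive happen only when the node is in sunlight with a nearly full battery. It uses Python's standard `zlib` module purely as a stand-in lossless compressor (the text leaves the actual scheme open, and also mentions lossy options), and the `PowerState` fields, the 95% charge threshold, and the names `DeferredCompressor` and `compression_energy_wh` are illustrative assumptions.

```python
import zlib
from dataclasses import dataclass, field
from typing import List


@dataclass
class PowerState:
    battery_fraction: float  # hypothetical telemetry: state of charge, 0.0 to 1.0
    in_sunlight: bool        # True when the solar panels are producing power


@dataclass
class DeferredCompressor:
    min_charge: float = 0.95  # assumption: only compress near full charge
    pending: List[bytes] = field(default_factory=list)  # raw data, buffered first
    archive: List[bytes] = field(default_factory=list)  # compressed, committed data

    def buffer(self, data: bytes) -> None:
        """Hold raw data until there is enough power to compress it."""
        self.pending.append(data)

    def power_ok(self, power: PowerState) -> bool:
        return power.in_sunlight and power.battery_fraction >= self.min_charge

    def tick(self, power: PowerState) -> int:
        """Compress and commit all pending data if power allows; return the count."""
        if not self.power_ok(power):
            return 0
        committed = 0
        while self.pending:
            self.archive.append(zlib.compress(self.pending.pop(0), 6))
            committed += 1
        return committed


def compression_energy_wh(engine_watts: float, seconds: float) -> float:
    """Energy drawn by the compression engine, in watt-hours."""
    return engine_watts * seconds / 3600
```

Calling `tick` at 60% charge, or in eclipse, commits nothing; at full charge in sunlight the pending data is compressed and archived. At the upper end of the quoted range, a 20 W engine running for 30 minutes draws 10 Wh, which is the kind of budget this scheduling is meant to shift into power-rich periods.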

The following picture shows a representative 8GB MRAM memory module architecture using two different types of MRAM devices from Avalanche Technology, denoted “Persistent SRAM” or “P-SRAM™” because of their SRAM-like interface characteristics: